Google launched TensorFlow Serving today, a new open source project that aims to help developers take their machine learning models into production. Unsurprisingly, TensorFlow Serving is optimized for Google’s own TensorFlow machine learning library, but the company says it can also be extended to support other models and data.

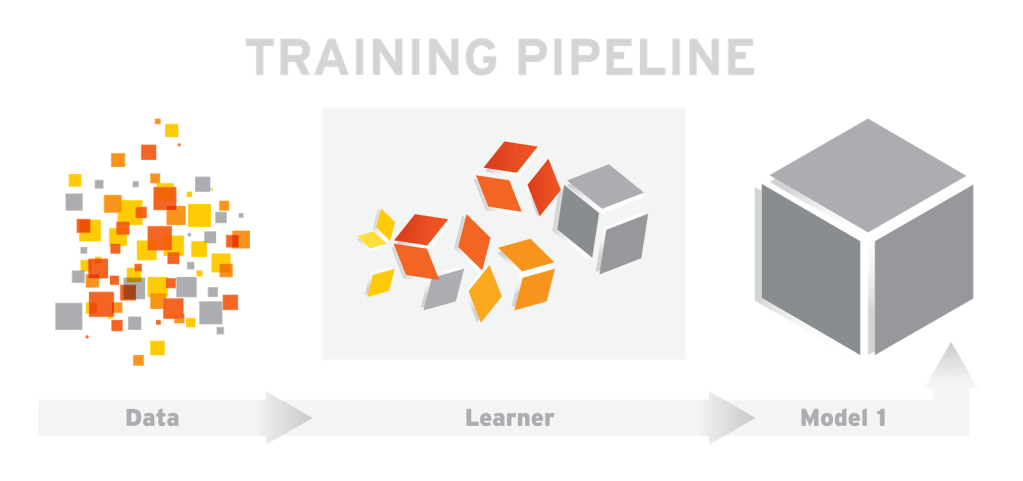

While projects like TensorFlow make it easier to build machine learning algorithms and train them on certain types of data, TensorFlow Serving specializes in making the resulting models usable in production environments. Developers train their models with TensorFlow and then use TensorFlow Serving's APIs to answer requests from client applications. Google also notes that TensorFlow Serving can use a machine's available GPU resources to speed up processing.
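At launch, clients talked to TensorFlow Serving over gRPC; later releases also added a REST API. As a minimal sketch of what a client request can look like, the Python below builds (but does not send) a REST predict call. The helper `build_predict_request`, the model name `my_model`, and the host are hypothetical; port 8501 is the REST port conventionally used in TensorFlow Serving's Docker setup.

```python
import json


def build_predict_request(host, model_name, instances, port=8501, version=None):
    """Build the URL and JSON body for a TensorFlow Serving REST predict call.

    Hypothetical helper: assumes a server with the REST API enabled.
    Nothing is sent over the network here.
    """
    path = f"/v1/models/{model_name}"
    if version is not None:
        # Pin a specific model version instead of the one the server serves by default.
        path += f"/versions/{version}"
    url = f"http://{host}:{port}{path}:predict"
    # "Row" format: one entry per input example.
    body = json.dumps({"instances": instances})
    return url, body


if __name__ == "__main__":
    url, body = build_predict_request("localhost", "my_model", [[1.0, 2.0, 5.0]])
    print(url)   # http://localhost:8501/v1/models/my_model:predict
    print(body)  # {"instances": [[1.0, 2.0, 5.0]]}
```

To actually send it, a client would POST `body` with a `Content-Type: application/json` header (for example via `urllib.request`); the server replies with a JSON object whose `predictions` field holds one result per instance.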

As Google notes, having a system like this in place doesn't just let developers take their models into production faster; it also lets them experiment with different algorithms and models while keeping a stable architecture and API. And as developers refine their models, or as a model's output changes in response to new incoming data, the rest of the architecture stays the same.
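The stable-API idea can be illustrated with a toy model slot in Python. This is not TensorFlow Serving's code, and `ModelSlot` and `latest_version` are hypothetical names. The sketch borrows one real convention: TensorFlow Serving treats numbered subdirectories under a model's base path as versions and, by default, serves the newest one. Callers keep using the same `predict()` while new versions are loaded underneath.

```python
from typing import Callable, Dict, Iterable, Optional

# Hypothetical stand-in for a trained model: maps one input to one output.
Model = Callable[[float], float]


def latest_version(entries: Iterable[str]) -> Optional[int]:
    # Numeric names (like version directories "1", "2", ...) count as versions;
    # anything else is ignored. Returns the highest version, or None.
    versions = [int(name) for name in entries if name.isdigit()]
    return max(versions) if versions else None


class ModelSlot:
    """Toy served model: one stable predict() entry point whose
    implementation can be swapped by loading new versions."""

    def __init__(self) -> None:
        self._versions: Dict[int, Model] = {}

    def load(self, version: int, model: Model) -> None:
        # Adding a version does not change how callers invoke predict().
        self._versions[version] = model

    def predict(self, x: float, version: Optional[int] = None) -> float:
        # Default to the newest loaded version unless the caller pins one.
        v = version if version is not None else latest_version(str(k) for k in self._versions)
        if v is None or v not in self._versions:
            raise KeyError(f"version {v} not loaded")
        return self._versions[v](x)


if __name__ == "__main__":
    slot = ModelSlot()
    slot.load(1, lambda x: 2 * x)
    print(slot.predict(3))             # 6, served by version 1
    slot.load(2, lambda x: 2 * x + 1)  # a refined model arrives
    print(slot.predict(3))             # 7, same call, now served by version 2
    print(slot.predict(3, version=1))  # 6, a client pinned to the old version
```

Pinning `version=` mirrors how a client can ask for a specific model version while other clients automatically follow the latest one.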

TensorFlow Serving is written in C++ (not in Google's own Go). The software is optimized for performance, and Google says it can handle more than 100,000 queries per second on a 16-core Xeon machine.

The code for TensorFlow Serving — as well as a number of tutorials — is now available on GitHub under the Apache 2.0 license.

Source: http://feedproxy.google.com/~r/Techcrunch/~3/OqtZokEBUXQ/